I guess that would just be a GPU?

Actually, that would be either a TPU (tensor processing unit) or an NPU (neural processing unit). They’re purpose-built chips for AI/ML workloads.

You can actually promote a pawn to a rook, bishop, or knight instead of a queen; this is known as underpromotion. It’s mostly a “why would you ever do that?” thing, though.

I agree with the other poster; you should look into Proxmox. I migrated from ESXi to Proxmox 7-8 years ago or so, and honestly it’s been WAY better than ESXi. The migration process was pretty easy too; I was able to bring over the images from ESXi and load them directly into Proxmox.

That’s because they legally can’t include the cores in the Steam version. You’re able to go add any additional cores you want, however.

Running arr services on a proxmox cluster to download to a device on the same network. I don’t think there would be any problems but wanted to see what changes need to be done.

I’m essentially doing this with my setup. I have a box running Proxmox and a separate networked NAS device. There aren’t really any changes, per se, other than pointing the *arr installs at the correct mounts. One thing to note: I would make sure that your download, processing, and final locations are all within the same mount point, so that you can take advantage of atomic moves (a move within one filesystem is just a rename, while a move across filesystems is a full copy and delete).
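To make the atomic move point concrete, here is a minimal Python sketch. The function names and the `downloads`/`media` folder names are made up for illustration; they are not anything the *arr apps expose. It checks whether two paths report the same device ID and only then moves the file with a single rename. Treat the device check as a heuristic: two separate bind mounts or container volumes backed by the same disk can report the same device and still refuse a rename, in which case `os.replace` raises an `OSError`.

```python
import os
import tempfile


def same_filesystem(path_a: str, path_b: str) -> bool:
    """Return True if both existing paths report the same device ID."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev


def move_atomically(src: str, dst_dir: str) -> str:
    """Move src into dst_dir with a single rename, refusing to cross filesystems."""
    if not same_filesystem(src, dst_dir):
        raise OSError(f"{src} and {dst_dir} are on different filesystems")
    dst = os.path.join(dst_dir, os.path.basename(src))
    # os.replace is a rename; it does not fall back to copy-and-delete,
    # so it raises OSError if the rename cannot be done in place.
    os.replace(src, dst)
    return dst


if __name__ == "__main__":
    # Hypothetical layout: both folders live under one root, i.e. one mount.
    with tempfile.TemporaryDirectory() as root:
        downloads = os.path.join(root, "downloads")
        media = os.path.join(root, "media")
        os.makedirs(downloads)
        os.makedirs(media)
        src = os.path.join(downloads, "episode.mkv")
        with open(src, "w") as handle:
            handle.write("placeholder")
        print(same_filesystem(downloads, media))
        print(move_atomically(src, media))
```

If `same_filesystem` returns False for your download and library folders, the apps will have to copy instead of rename, which is slower and temporarily uses double the space.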

You’re talking about XMPP, and it’s Google, with Google Chat, that people are referring to when they tell that story.

That said, the story people throw around about Google killing it leaves out a lot of details. Specifically, the premier service that used and developed the standard, Jabber, was acquired by Cisco like 8 years before Google supposedly killed it, which I would argue affected it far harder than Google Chat did.

It was also lacking a lot of modern features that were becoming staples around the time that it was killed, e.g. QoS, assured delivery, read receipts, and a few other things. I still don’t think the core protocol supports them.

Also, the protocol still exists and is used. It’s used by Microsoft in Skype for Business, and it’s also the IM protocol for lots of gaming platforms like Origin, PlayStation, the Switch (for the push notifications of its online service), League of Legends, Fortnite, and others. It’s still a reasonably popular standard when it comes to chat programs, though none of them that I’m aware of use the actual federation piece of it to talk to each other.

While the tactic alluded to does exist (“embrace, extend, extinguish”), I’ve never been convinced that Google “killed” XMPP, as it’s been around a long time and continues to be for various reasons. Even with Google Chat, it was never a ‘front end’ thing many users even thought about, because it’s back-end framework tech, and it continues to be so in lots of different places today. I’m reasonably sure that the people who get upset about it and proclaim Google killed it are basically just upset that it didn’t become the de facto chat standard. I would argue almost nothing is the de facto standard today anyway, unless you count Discord, which kinda came out of nowhere like a whirlwind, took over the chat space, and has nothing to do with any XMPP drama.

Ultimately, it’s up to you (whoever is reading this) to look into the facts of the matter and decide for yourself if that’s what really happened, but keep in mind, the people who usually repeat the anecdote about how Google killed it have an agenda to push. I’m personally skeptical, because there are reasons for Google to have dropped it (see the limitations mentioned above), and even back then, it wasn’t that outrageously popular. In fact, I would argue it’s more widely used today than it was back then, but I have no hard numbers on that.

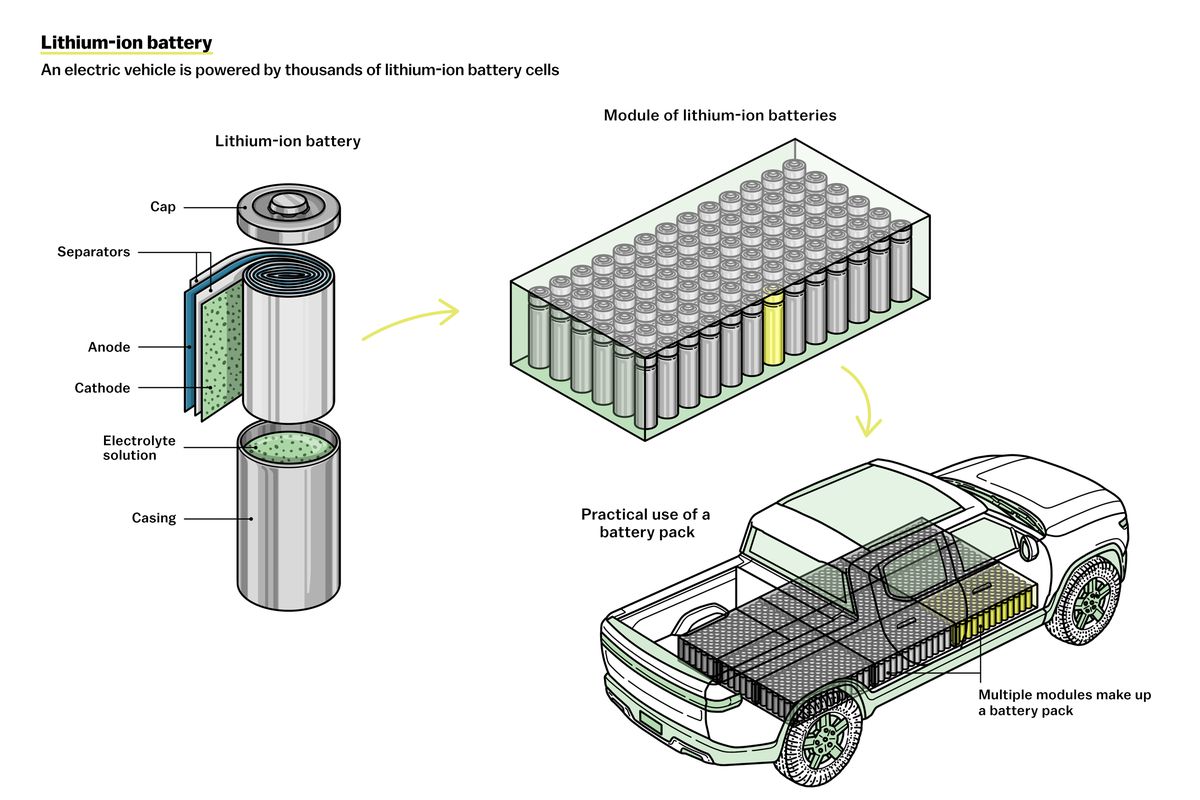

I mean, this is still more or less what the fast charging standards do; they’re pouring more power into it faster with higher bandwidth cables and sectioned charging.

The level 3 fast charger is basically the equivalent of 4 power cords from your wall. Also, adding more and more hardware for it will make the electronics more complicated, which means more expensive and more difficult to manufacture and repair.

But also, as you scale this up more and more, you’ll start running into issues that make it difficult to keep pulling more power; energy from the grid isn’t infinite.

This is already what they do. Dry batteries that are bigger than about your phone are generally composed of a whole lot of battery cells. If you ever take ’em apart, you’d basically see the pack is made up of what looks like a whole bunch of AA batteries (but larger).

They do charge “in parallel”, but that’s limited by how much electricity you can feed into the system as a whole, so it doesn’t speed up the process; it just makes them all fill at about the same rate.
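A quick back-of-the-envelope sketch of why splitting a pack into cells doesn’t make it fill faster. The numbers (a 60 Wh pack, a 30 W charger, 12 cells) are made up for illustration, and this ignores conversion losses and the way real chargers taper off near full. The point is only that the charger’s power gets divided across the cells in the same proportion as the capacity does, so the ratio stays the same.

```python
def pack_charge_time_hours(pack_capacity_wh: float, charger_power_w: float) -> float:
    """Idealized charge time for the whole pack: energy divided by power."""
    return pack_capacity_wh / charger_power_w


def per_cell_charge_time_hours(
    pack_capacity_wh: float, charger_power_w: float, cell_count: int
) -> float:
    """Idealized charge time for one cell when the charger feeds all cells at once."""
    cell_capacity_wh = pack_capacity_wh / cell_count  # each cell holds a share of the energy
    cell_power_w = charger_power_w / cell_count       # and receives a share of the power
    return cell_capacity_wh / cell_power_w


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to keep the arithmetic easy to follow.
    print(pack_charge_time_hours(60.0, 30.0))
    print(per_cell_charge_time_hours(60.0, 30.0, 12))
```

Both calls come out to the same time; the only way to shorten it is to raise the total power going in, which is exactly the part that’s limited.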

Making the cells swappable is basically what this video is about.

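As of Java 21, you can actually just use the following (it’s a preview feature in 21, so you need to compile and run with `--enable-preview`):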

void main()

Isn’t that basically what government contracts are? Subscriptions?

I have Mediacom as well, but in a larger city in the Midwest. They have data caps here too, and I was paying about $100 for exactly this same plan up until a couple years ago. They started upgrading our speeds/caps because a new fiber company (Metronet) is building in the area. Now I’m on 1 Gbps down and a 4 TB cap. I still plan to switch to Metronet when they finally light up my area, as it’s cheaper for the same speeds (plus no data caps).

Even more frustrating when you realize, and feel free to correct me if I’m wrong, these new “AI” programs and LLMs aren’t really novel in terms of theoretical approach: the real revolution is the amount of computing power and data to throw at them.

This is 100% true. LLMs, neural networks, Markov chains, gradient descent, etc. on down the line are nothing particularly new. They’ve collectively been studied academically for 30+ years. It’s only recently that we’ve been able to throw huge amounts of data, computing capacity, and tuning time at said models to achieve results unthinkable 10-ish years ago.

There have been efficiencies, breakthroughs, tweaks, and changes over this time too, but that’s to be expected. Largely, it’s the sheer raw size/scale that has only recently become achievable.

I’m not sure what you’re trying to say here; LLMs are absolutely under the umbrella of AI, they are 100% a form of AI. They are not AGI/STRONG AI, but they are absolutely a form of AI. There’s no “reframing” necessary.

No matter how you frame it, though, there’s always going to be a battle between the entities that want to use a large amount of data for profit (corporations) and the people who produce said content.

Most will just slow the rate your Deck discharges (if you’re using it); you need a high-output charger to actually charge it. Anker makes a few that will. I personally have the 737 and think it’s worth every single penny with the Steam Deck.

To my understanding, C# is sort of a “second class citizen” in Godot; there’s a lot of stuff you can’t quite do, or that is clunkier than it would be in GDScript. But I also haven’t used Godot enough to really weigh in on that (only a couple of small projects).

That said, while GDScript is very “Python-like”, it is definitely not Python. If you want to focus on C#, I would definitely echo the Unity-over-Godot sentiment.

All in all, the best way to learn is to just do it. Go out on youtube and find some tutorials, and just hunker down and try!

FWIW, at this point, that study would be horribly outdated. It was done in 2022, which means the work probably took place in early 2022 or 2021. The models used for coding have come a long way since then; the study would essentially have to be redone on current models to see if that’s still the case.

People’s perceptions have probably not changed, but whether the code is actually insecure would need to be reassessed.