Is this another thing that the rest of the world didn’t know the US doesn’t have?

274·1 year ago

Every right-wing accusation is a confession.

many years now

This appears to be an escalating fraud, affecting newer models more than old. So I’d guess that’s ^^ the answer.

It’s not just a Reuters investigation; they’ve been fined by several jurisdictions, and they can certainly afford lawyers to contest those charges if they’re false.

1·1 year ago

They don’t seem to list the instances they trawled (just "the top 25" on a given day, with a link to the site the ranking came from, but no list of the instances themselves, as far as I can see).

We performed a two day time-boxed ingest of the local public timelines of the top 25 accessible Mastodon instances as determined by total user count reported by the Fediverse Observer…

That said, most of this seems to come from the Japanese instances, which most other instances already defederate from precisely because of CSAM? From the report:

Since the release of Stable Diffusion 1.5, there has been a steady increase in the prevalence of Computer-Generated CSAM (CG-CSAM) in online forums, with increasing levels of realism.[17] This content is highly prevalent on the Fediverse, primarily on servers within Japanese jurisdiction.[18] While CSAM is illegal in Japan, its laws exclude computer-generated content as well as manga and anime. The difference in laws and server policies between Japan and much of the rest of the world means that communities dedicated to CG-CSAM—along with other illustrations of child sexual abuse—flourish on some Japanese servers, fostering an environment that also brings with it other forms of harm to children. These same primarily Japanese servers were the source of most detected known instances of non-computer-generated CSAM. We found that on one of the largest Mastodon instances in the Fediverse (based in Japan), 11 of the top 20 most commonly used hashtags were related to pedophilia (both in English and Japanese).

Some history for those who don’t already know: Mastodon is big in Japan. The reason why is… uncomfortable

I haven’t read the report in full yet but it seems to be a perfectly reasonable set of recommendations to improve the ability of moderators to prevent this stuff being posted (beyond defederating from dodgy instances, which most if not all non-dodgy instances already do).

It doesn’t seem to address the issue of some instances existing largely so that this sort of stuff can be posted.

0·1 year ago

There is this thing called context. And you forgot all about it.

0·1 year ago

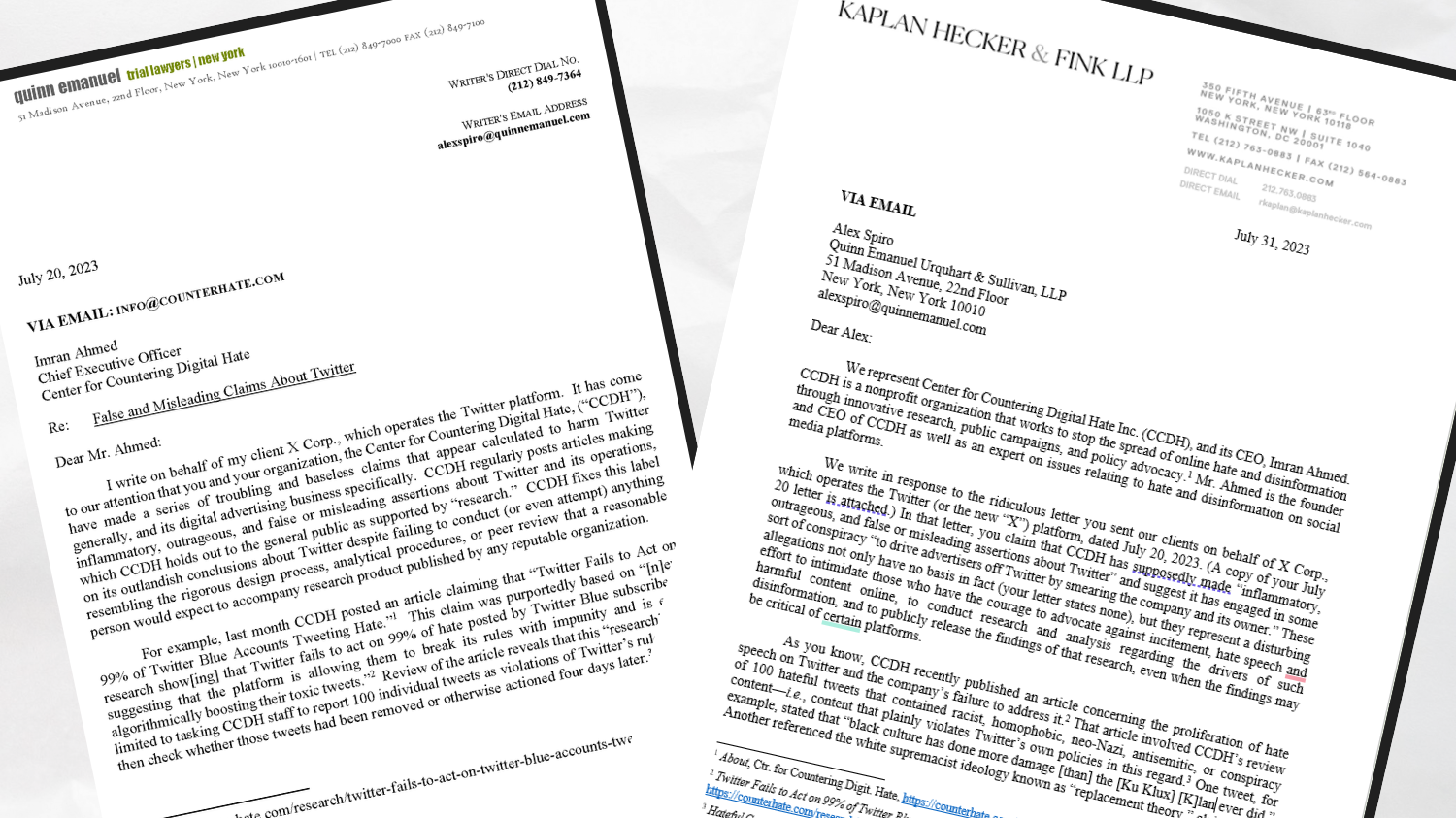

0·1 year agoNot defending the thin-skinned rich kid but the tweeter formerly known as @X didn’t lose anything apart from the name. Anyone can change their @name on Twitter and keep the rest of the account intact. Twitter changed his @name so that they could change their @name. Nothing else changed on either account.

31·1 year ago

There are exceptions to the rule, and this is one of them.

The rule works so well because journalists who can make a statement of fact, make a statement of fact. When they can’t stand the idea up, they use a question mark for cover. For example: "China is in default on a trillion dollars in debt to US bondholders. Will the US force repayment?"

This is an opinion piece which is asking a philosophical question. The rule does not apply.

6·1 year ago

tbf this is not very different from how many flesh’n’blood journalists have been finding content for years. The legendary crack squirrels of Brixton story was nearly two decades ago now (yikes!). Fox was a little late to the party with "U.K. Squirrels Are Nuts About Crack" in 2015.

Obviously, I want flesh’n’blood writers getting paid for their plagiarism-lite, not the cheapskates who automate it. But this kind of embarrassing error is a feature of the genre, and it has been gamed on social media for some time now (e.g. "Lib Dem leader Jo Swinson forced to deny shooting stones at squirrels after spoof story goes viral").

I don’t know what it is about squirrels…

6·1 year ago

This lil robot was trained to know facts and communicate via natural language.

Oh, stop it. It does not know what a fact is. It does not understand the question you ask it or the answer it gives you. It’s a very expensive Magic 8-Ball. It’s worse at maths than a 1980s calculator because it does not know what maths is, let alone how to do it, not because it’s somehow emulating how bad the average person is at maths. Get a grip.

181·1 year ago

It’s OK. Ordinary people will have no trouble at all making sure they use a different vehicle every time they drive their kid to college or collect an elderly relative for the holidays. This will only inconvenience serious criminals.

1·1 year ago

Both articles point out that it is not possible to calculate, because Tesla is withholding some of the necessary data (and has asked the NHTSA to redact the rest).

The WaPo gets closest to naming all the data you would need (miles driven in FSD; type of road being driven on), but you’d also need to match it to the Tesla owner demographic (wealthy, white, middle-aged men) and compare against cars with a 5-star NHTSA rating for structural safety.

I have no doubt that most of the autopilot miles driven are in Teslas, thanks to Musk’s reckless promotion of it as FSD.

To know whether the technology fails more or less often than other autopilot systems, you would need data that Tesla are withholding, and much more detailed data on other cars than you can google up on the NHTSA website.

Which is why I asked if you were sure.

Because I don’t think you can possibly be sure. But you say you are, so say why you’re sure.

3·1 year ago

The NHTSA ratings are based on crash tests, not real-world accident statistics.

You didn’t read your own link, but did you read the article I linked to (and ideally the WaPo article it links to, for more detail)?

If so, I ask again: are you sure about that?

2·1 year ago

they have lower than average road fatalities

23·1 year ago

*accurately

43·1 year ago

You’re agreeing with me but using more words.

I’m more annoyed than upset. This technology is eating resources which are badly needed elsewhere and all we get in return is absolute junk which will infest the literature for decades to come.

11·1 year ago

Efficacy of prehospital administration of fibrinogen concentrate in trauma patients bleeding or presumed to bleed (FIinTIC)

48·1 year ago

Sam Altman is a know-nothing grifter. HTH

75·1 year ago

They cannot be anything other than stochastic parrots, because that is all the technology allows them to be. They are not intelligent, they don’t understand the question you ask or the answer they give you, and they don’t know what truth is, let alone how to determine it. They’re just good at producing answers that sound like a human might have written them. They’re a parlour trick. Hi-tech Magic 8-Balls.

142·1 year ago

In context. And that is exactly how they work. It’s just a statistical prediction model with billions of parameters.

Yes, they are. Probably not in the country that calls it transit, mind. And lots of people would like to be able to have more private conversations in public, whether or not they’re travelling at the time.

Plus, I’ve seen a lot of threads over the years from gamers, or the people who have to live with them, looking for something exactly like this.